Introduction

In an earlier post (How to Build a Roadmap) I discussed the specific steps required to develop a defensible, well-thought-out roadmap that identifies specific actions, using an overall pattern all roadmaps should follow. The steps required to complete this work are:

- Develop a clear and unambiguous understanding of the current state

- Define the desired end state

- Conduct a Gap Analysis exercise

- Prioritize the findings from the Gap Analysis exercise into a series of gap closure strategies

- Discover the optimum sequence of actions (recognizing predecessor – successor relationships)

- Develop and Publish the Roadmap

I have received a lot of questions about step one (1), which is understandable given the lack of detail about just how to quickly gather real, quantifiable objectives and potential functional gaps. In the interest of simplicity, and to keep the length of the prior post manageable, I omitted specific details about how to do this.

This post will provide a little more exposition and insight into one method I have found useful in practice. Done well, it provides an honest and objective look in the mirror: it shows where we truly are as an organization and, in some cases, makes us face the uncomfortable truth about where we need to improve. Organizational dynamics and politics (we are human, after all) can distort the picture we are seeking and obscure the findings. I think this happens when, in our quest to preserve the preferred “optics”, we proceed without an objective, shared method that all stakeholders know about and approve before embarking down this path. In the worst case, if the work is left solely to outside consultants or to an unskilled practitioner, we risk doing more harm than good before we even start. This is why I will present a quick, structured way to gather and evaluate current state that all stakeholders can review and approve before the activity gets underway. Our objective is to develop a clear and unambiguous understanding of the current state, using a formal, well-understood way to gather and evaluate the results.

Define Current State

First, we need a shared, coherent set of relevant questions that draw participants into the process and whose answers can be quantified. We should be able to compile, evaluate, and present these answers in a way that is easy to understand. Everyone participating in the work should be able to grasp immediately where the true gaps or shortcomings exist and why they are occurring. This holds whether we are evaluating Information Strategy, our readiness to embrace an SOA initiative, or the launch of a new business initiative. So, we need just a few key components.

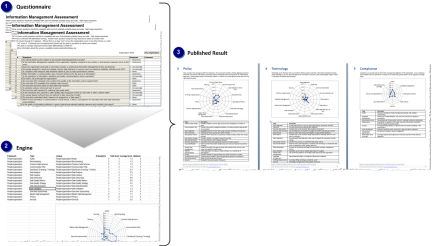

- A pool or set of relevant questions that participants can answer quickly and whose results can be quantified

- An engine to compile the results

- A quick way to compile and summarize the results for distribution to the stakeholders
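The three components above can be sketched in a few lines of Python to make the data flow concrete. This is a minimal illustration, not the MIKE 2.0 templates: the `Question` type, the question IDs, the sample questions, and the choice to skip (rather than zero-score) unanswered questions are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    qid: str       # hypothetical identifier, e.g. "POL-01"
    category: str  # grouping used later for scoring
    text: str


# A tiny illustrative question pool (hypothetical questions, not MIKE 2.0 content).
QUESTION_POOL = [
    Question("POL-01", "Policy", "Are information policies published to staff?"),
    Question("POL-02", "Policy", "Are there consequences for policy violations?"),
    Question("TEC-01", "Technology", "Do staff have tools to maintain data quality?"),
]


def compile_results(pool, responses):
    """Engine: join one participant's responses (qid -> score) to the pool, grouped by category."""
    by_category = defaultdict(list)
    for question in pool:
        if question.qid not in responses:
            continue  # assumption: unanswered questions are skipped, not scored as zero
        by_category[question.category].append(responses[question.qid])
    return dict(by_category)


def summarize(compiled):
    """Summary: average score per category, rounded for presentation."""
    return {cat: round(sum(scores) / len(scores), 2) for cat, scores in compiled.items()}


responses = {"POL-01": 4, "POL-02": 1, "TEC-01": 3}
print(summarize(compile_results(QUESTION_POOL, responses)))
```

Keeping the pool, the compile step, and the summary separate mirrors the three components: you can swap in a different question pool without touching the engine.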

Now this is where many of us come up short. Where do I find the questions, and how do I make sure they are relevant? What is this engine I need to compile the results? And how do I quickly compile the results and publish them for comment every time I need to? One of the answers came to me a few years ago when I first saw the MIKE 2.0 quick assessment engine for Information Maturity.

The Information Maturity (IM) Quick Scan is the MIKE 2.0 tool used to assess current and desired Information Maturity levels within an organization. This survey instrument is broad in scope and is intended to assess enterprise capabilities rather than focus on a single subject area. Although this instrument focuses on Information Maturity, I quickly realized I had been doing something similar for years across many other domains. The real value here is the open source resource you can use to kick-start your own efforts.

So what does this do?

I’m going to focus on the MIKE 2.0 tools here because they are readily available to you. The MS Office templates you need can be found at http://mike2.openmethodology.org/wiki/QuickScan_MS_Office_survey.

Extending these templates into other subject areas is pretty simple once you understand how they work. The basic premise remains the same; it is really just a matter of injecting your own subject matter expertise and organizing the results in a way that makes sense to you and your organization. So here is what you will find there.

First, a set of questions organized around the following categories:

- People/Organization considers the human side of Information Management, looking at how people are measured, motivated and supported in related activities. Those organizations that motivate staff to think about information as a strategic asset tend to extract more value from their systems and overcome shortcomings in other categories.

- Policy considers the message to staff from leadership. The assessment considers whether staff are required to administer and maintain information assets appropriately and whether there are consequences for inappropriate behaviours. Without good policies and executive support, it is difficult to promote good practices, even with the right supporting tools.

- Technology covers the tools that are provided to staff to properly meet their Information Management duties. While technology on its own cannot fill gaps in the information resources, a lack of technological support makes it impractical to establish good practices.

- Compliance surveys the external Information Management obligations of the organization. A low compliance score indicates that the organization is relying on luck rather than good practice to avoid regulatory and legal issues.

- Measurement looks at how the organization identifies information issues and analyses its data. Without measurement, it is impossible to sustainably manage the other aspects of the framework.

- Process and Practice considers whether the organization has adopted standardized approaches to Information Management. Even with the right tools, measurement approaches and policies, information assets cannot be sustained unless processes are consistently implemented. Poor processes result in inconsistent data and a lack of trust by stakeholders.
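When you extend the question pool, or build one for another domain, it is worth confirming that every category still has at least one question; an empty category silently drops out of the results. The sketch below is a hypothetical helper: the enum member names are my own, while the display labels follow the six categories listed above.

```python
from enum import Enum


class Category(Enum):
    PEOPLE_ORGANIZATION = "People/Organization"
    POLICY = "Policy"
    TECHNOLOGY = "Technology"
    COMPLIANCE = "Compliance"
    MEASUREMENT = "Measurement"
    PROCESS_PRACTICE = "Process and Practice"


def uncovered_categories(question_categories):
    """Return categories with no questions in the pool, in declaration order."""
    covered = set(question_categories)
    return [c for c in Category if c not in covered]


# Hypothetical pool, reduced to the category of each question.
pool = [Category.POLICY, Category.POLICY, Category.TECHNOLOGY, Category.COMPLIANCE]
for missing in uncovered_categories(pool):
    print("No questions for:", missing.value)
```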

The templates include an engine to compile the results and an MS Word document template to render and present the results. Because they are based on MS Office, Assessment_Questions.xlsx, Assessment_Engine.xlsx, and Assessment_Report.docx are linked using absolute rather than relative paths (the links are effectively hardcoded to find the linked files in the c:\assessment folder; yikes!). You open and score Assessment_Questions first; Assessment_Engine then picks up these values and creates a nice tabbed interface and charts across all six subject areas. The Word document in turn picks these up and creates the customized report.
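Because the links are hardcoded, the chain only works if all three files sit in the expected folder. A small pre-flight check can confirm this before you open the workbooks. The helper below is hypothetical and deliberately limited: it only checks that the files exist in a given folder and does not read, repair, or rewrite the Office links themselves.

```python
from pathlib import Path

# File names come from the MIKE 2.0 templates described above.
ASSESSMENT_FILES = (
    "Assessment_Questions.xlsx",
    "Assessment_Engine.xlsx",
    "Assessment_Report.docx",
)


def missing_assessment_files(folder):
    """Hypothetical pre-flight check: list the expected files not present in `folder`.

    Only existence is checked; the links inside the Office files are untouched.
    """
    base = Path(folder)
    return [name for name in ASSESSMENT_FILES if not (base / name).is_file()]


# The templates expect c:\assessment; pass another folder to check a copy elsewhere.
missing = missing_assessment_files(r"c:\assessment")
if missing:
    print("Missing:", ", ".join(missing))
else:
    print("All three linked files are in place.")
```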

You can extend this basic model to include your own relevant questions in other domains (for example, ESB- or SOA-related assessments, or Business Intelligence). We are going to stick with the Information Maturity quick scan for now. Note that I have extended a similar model to include SOA Readiness, BI/DW, and Business Strategy assessments.

How it Works

The questions in the quick scan are organized around six (6) key groups in this domain: Organization, Policy, Technology, Compliance, Measurement, and Process/Practice. In the MIKE 2.0 template, the results are tabulated from responses ranging from zero (0 – Never) to five (5 – Always). Of course, you can customize the response scale; the real point here is that we want to quantify the responses we receive.
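If you customize the response scale, a small amount of validation and normalization keeps the numbers comparable across surveys. In this sketch the `ResponseScale` type and the 1..4 example scale are hypothetical; only the 0 (Never) to 5 (Always) defaults come from the MIKE 2.0 template. Normalizing to 0.0..1.0 is one reasonable choice, not the template's own method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResponseScale:
    low: int = 0   # MIKE 2.0 template: 0 = Never
    high: int = 5  # MIKE 2.0 template: 5 = Always

    def __post_init__(self):
        if self.high <= self.low:
            raise ValueError("high must be greater than low")

    def score(self, response: int) -> int:
        """Validate a raw response; reject anything outside the scale."""
        if not self.low <= response <= self.high:
            raise ValueError(f"response {response} outside {self.low}..{self.high}")
        return response

    def normalize(self, response: int) -> float:
        """Map a response onto 0.0..1.0 so surveys with different scales can be compared."""
        return (self.score(response) - self.low) / (self.high - self.low)


mike_scale = ResponseScale()        # 0..5
custom_scale = ResponseScale(1, 4)  # hypothetical 1..4 scale
print(mike_scale.normalize(4), custom_scale.normalize(4))
```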

The engine component takes the results, builds a summary, and produces accompanying tabs that present the Framework, Topic, Lookup, # Questions, Total Score, Average Score, and Optimum within each grouping, along with radar charts. The MS Word document template then links to this worksheet and grabs the values and radar charts to assemble the final document. If all this sounds confusing, grab the templates and try them for yourself.

Each of these six (6) perspectives is then summarized and combined into an MS Word document to present to the stakeholders.
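The per-grouping summary the engine produces can be approximated as follows. This is a sketch, not a reimplementation of Assessment_Engine.xlsx: it covers only the Topic, # Questions, Total Score, Average Score, and Optimum columns, and it assumes Optimum means the best achievable total (number of questions × 5). The template may define Optimum differently. The sample scores are hypothetical.

```python
from dataclasses import dataclass

MAX_SCORE = 5  # top of the 0 (Never) to 5 (Always) scale


@dataclass
class GroupSummary:
    topic: str
    num_questions: int
    total_score: int
    average_score: float
    optimum: int  # ASSUMPTION: best achievable total, num_questions * MAX_SCORE


def summarize_group(topic, scores):
    """Build one row of the per-grouping summary from that group's scores."""
    if not scores:
        raise ValueError(f"no scores for {topic}")
    total = sum(scores)
    return GroupSummary(
        topic=topic,
        num_questions=len(scores),
        total_score=total,
        average_score=round(total / len(scores), 2),
        optimum=len(scores) * MAX_SCORE,
    )


# Hypothetical scores for two groups.
results = {"Policy": [4, 1, 3], "Measurement": [2, 2]}
summaries = [summarize_group(t, s) for t, s in results.items()]
for s in summaries:
    print(f"{s.topic:<12} n={s.num_questions} total={s.total_score}/{s.optimum} avg={s.average_score}")

# The per-topic averages are the values a radar chart would plot, one axis per topic.
radar_axes = {s.topic: s.average_score for s in summaries}
```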

Results

I think you can see this is a valuable way to reduce complexity and to gather, compile, and present a fairly comprehensive view of the current state of the domain in question (in this case, Information Management Maturity). Armed with this quantified information, we can now proceed to step 3 and conduct a Gap Analysis exercise based on our understanding of the desired end state. The delta between these two (current and desired end state) becomes the basis for our roadmap development. I hope this has answered many of the questions about step one (1), Define Current State. This is not the only way to do this, but it has become the most consistent and repeatable method I’m aware of for quickly defining current state in my practice.
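Once current and desired scores exist for each category, the delta that feeds the Gap Analysis is simple arithmetic. A minimal sketch, assuming both states are recorded as average scores per category on the same 0 to 5 scale (the Quick Scan assesses both current and desired levels). Treating a missing current score as 0.0 is an assumption, and the sample numbers are hypothetical. Sorting by gap size is only a starting point for prioritization, not a substitute for it.

```python
def gap_analysis(current, desired):
    """Return (category, gap) pairs where desired exceeds current, largest gap first."""
    # Assumption: a category with no current score is treated as 0.0.
    gaps = {cat: round(desired[cat] - current.get(cat, 0.0), 2) for cat in desired}
    return sorted(
        ((cat, gap) for cat, gap in gaps.items() if gap > 0),
        key=lambda item: item[1],
        reverse=True,
    )


# Hypothetical average scores on the 0-5 scale.
current = {"Policy": 2.5, "Technology": 3.5, "Measurement": 1.0}
desired = {"Policy": 4.0, "Technology": 3.5, "Measurement": 4.0}
for category, gap in gap_analysis(current, desired):
    print(f"{category}: close a gap of {gap}")
```

Categories already at or above the desired level (Technology here) drop out, so the output lists only the gaps the roadmap needs to close.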
